New Google AI Research Proposes Deep-Thinking Ratio to Improve LLM Accuracy While Cutting Total Inference Costs in Half

For the last few years, the AI world has followed a simple rule: if you want a Large Language Model (LLM) to solve a harder problem, make its Chain-of-Thought (CoT) longer. But new research from the University of Virginia and Google shows that ‘thinking long’ is not the same as ‘thinking hard’.

The research team found that simply adding more tokens to a response can actually make an AI less accurate. Instead of counting words, the researchers introduce a new measure: the Deep-Thinking Ratio (DTR).

The Failure of ‘Token Maxing’

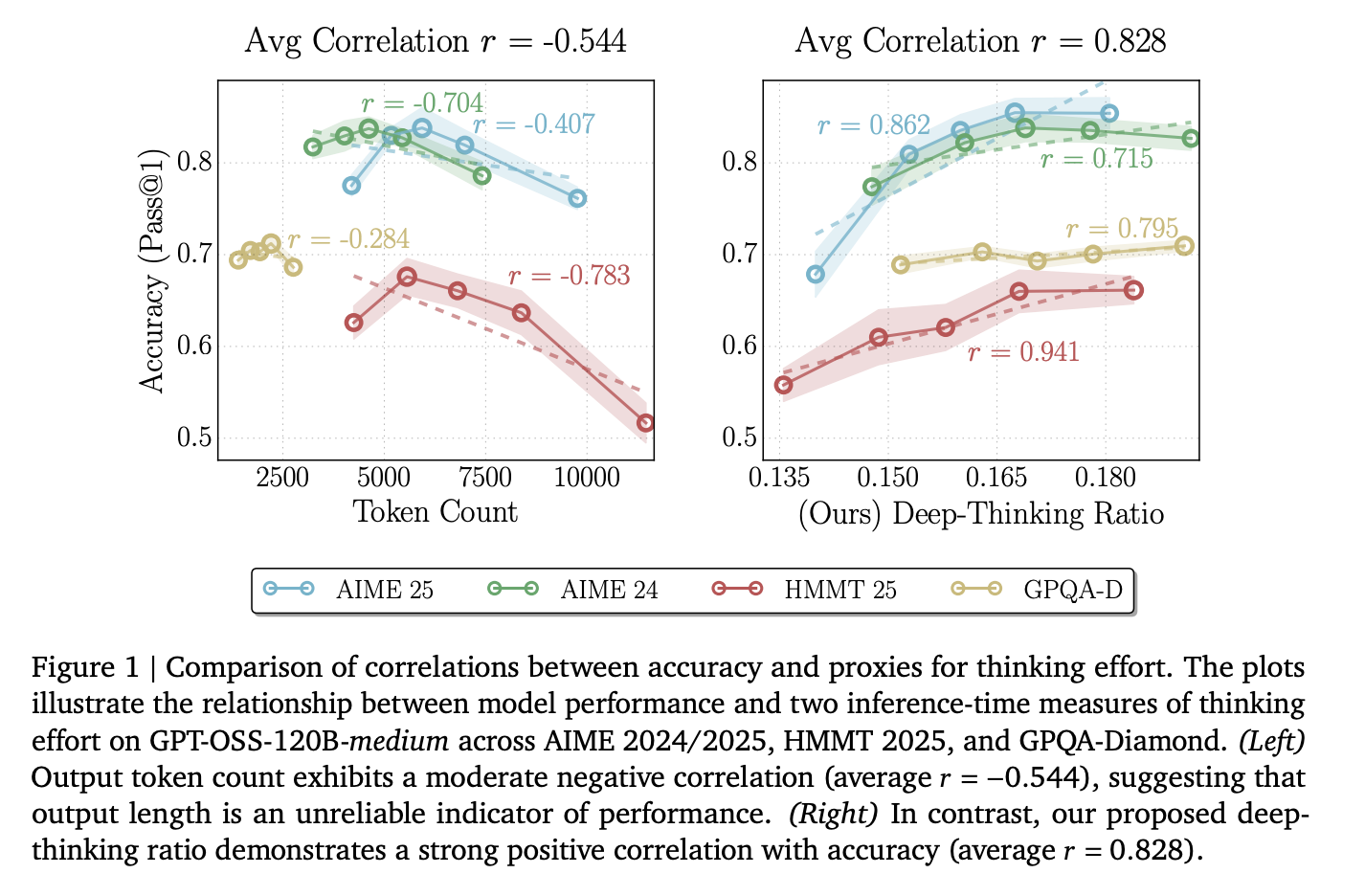

Developers often use token count as a proxy for the effort an AI puts into a task. However, the researchers found that raw token count has an average correlation of r = -0.59 with accuracy.

This negative value means that as the model generates more text, it is more likely to be wrong. This happens because of ‘overthinking,’ where the model gets stuck, repeats redundant steps, or amplifies its own mistakes. Relying on length alone wastes expensive compute on uninformative tokens.
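To make the sign of that coefficient concrete, here is a minimal sketch of how such a correlation could be computed, assuming r denotes the Pearson coefficient. The helper name `pearson_r` and the sample numbers are invented for illustration and are not data from the study.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)

# Made-up illustration data (NOT from the paper): response length in tokens
# and whether each response was correct (1) or wrong (0).
token_counts = [1200, 2500, 4100, 6000, 9800]
correct = [1, 1, 0, 1, 0]

# A negative value means longer responses tend to be wrong more often.
print(pearson_r(token_counts, correct))
```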

What are Deep-Thinking Tokens?

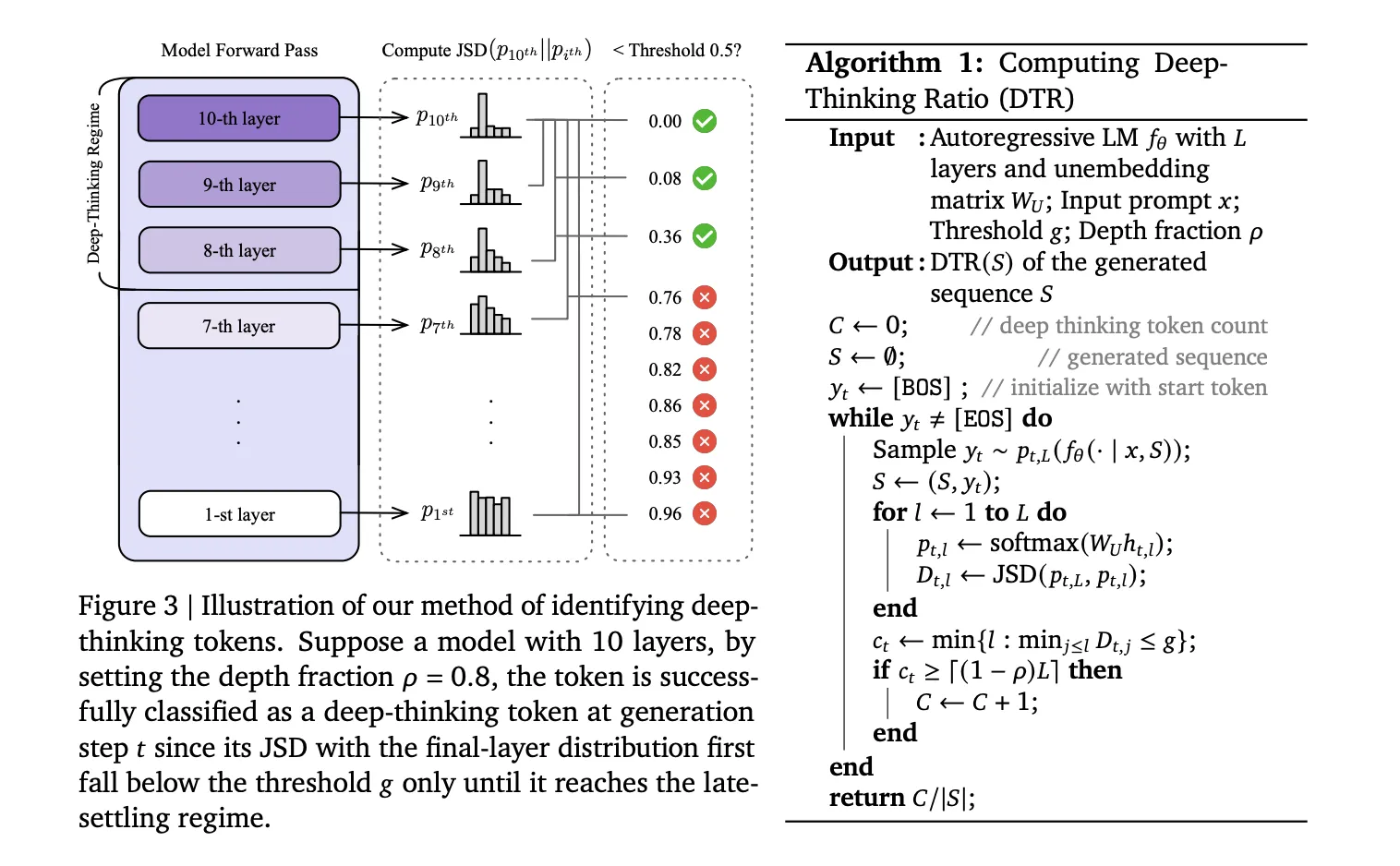

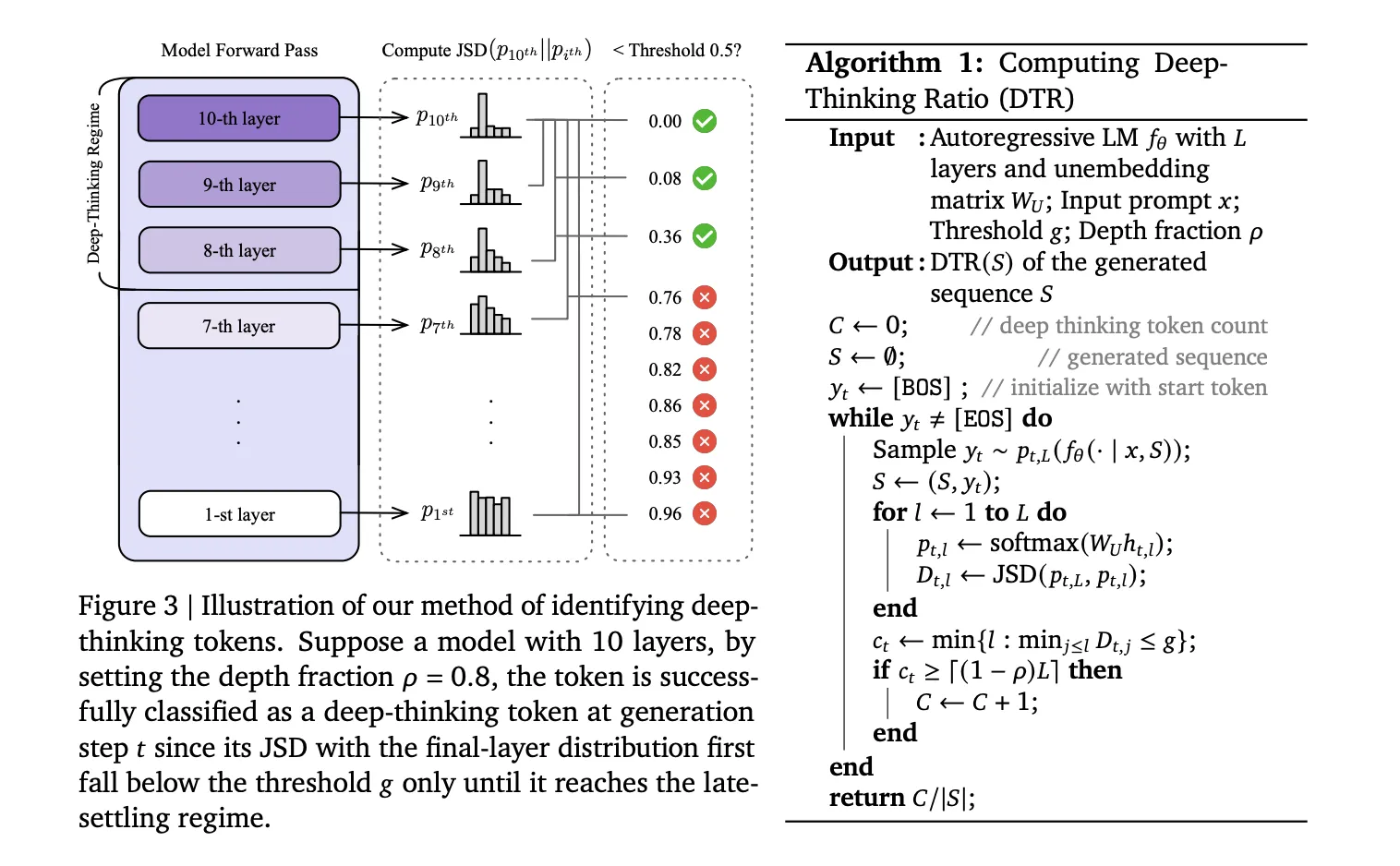

The research team argued that the real ‘thinking’ happens within the layers of the model, not just in the final output. When a model predicts a token, it processes the data through a series of transformer layers (L).

- Shallow tokens: For easy words, the model’s prediction stabilizes early. The ‘guess’ does not change much from layer 5 to layer 36.

- Deep-thinking tokens: For difficult logic or math symbols, the prediction shifts significantly in the deeper layers.

How to Measure Depth

To identify these tokens, the research team uses a technique that peeks at the model’s internal ‘drafts’ at every layer. They project the intermediate hidden states ($h_{t,l}$) into the vocabulary space using the model’s unembedding matrix ($W_U$). This produces a probability distribution ($p_{t,l}$) for every layer.

They then compute the Jensen-Shannon Divergence (JSD) between each intermediate-layer distribution and the final-layer distribution ($p_{t,L}$):

$$D_{t,l} := \mathrm{JSD}\left(p_{t,L} \,\|\, p_{t,l}\right)$$

A token is a deep-thinking token if its prediction only settles in the ‘late regime,’ defined by a depth fraction (ρ). In their experiments, they set ρ = 0.85, meaning the token only stabilizes in the final 15% of layers.

The Deep-Thinking Ratio (DTR) is the percentage of these ‘hard’ tokens in a full sequence. Across models such as DeepSeek-R1-70B, Qwen3-30B-Thinking, and GPT-OSS-120B, DTR showed a strong average positive correlation of r = 0.683 with accuracy.
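The pipeline described above can be sketched in plain Python. This is only an illustration under stated assumptions: the settling threshold `g`, the rule that a token "settles" at the earliest layer from which its divergence stays at or below `g`, the base-2 logarithm, and all function names are hypothetical choices, not details confirmed by the article. Real models also typically apply a final normalization before unembedding, which is omitted here.

```python
import math

def softmax(logits):
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def kl(p, q):
    # KL(p || q) in bits; terms with p_i == 0 contribute nothing.
    return sum(pi * math.log2(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def jsd(p, q):
    # Jensen-Shannon divergence: symmetric, bounded in [0, 1] with log base 2.
    m = [(pi + qi) / 2 for pi, qi in zip(p, q)]
    return 0.5 * kl(p, m) + 0.5 * kl(q, m)

def unembed(hidden, W_U):
    # hidden: d floats; W_U: d x V matrix as a list of rows. Returns V logits.
    vocab = len(W_U[0])
    return [sum(h * row[v] for h, row in zip(hidden, W_U)) for v in range(vocab)]

def settle_layer(layer_hiddens, W_U, g=0.5):
    """1-indexed earliest layer from which JSD to the final layer stays <= g.

    The threshold g and this settling rule are assumptions for illustration.
    """
    dists = [softmax(unembed(h, W_U)) for h in layer_hiddens]
    final = dists[-1]
    divs = [jsd(final, p) for p in dists]  # D_{t,l} for l = 1..L
    settle = len(divs)                     # the final layer always has D = 0
    for layer in range(len(divs), 0, -1):  # walk backward from the last layer
        if divs[layer - 1] <= g:
            settle = layer
        else:
            break
    return settle

def is_deep_thinking(layer_hiddens, W_U, rho=0.85, g=0.5):
    # Deep-thinking: the prediction only settles past the depth fraction rho.
    return settle_layer(layer_hiddens, W_U, g) > rho * len(layer_hiddens)

def deep_thinking_ratio(tokens_hiddens, W_U, rho=0.85, g=0.5):
    # Fraction of tokens in the sequence flagged as deep-thinking.
    flags = [is_deep_thinking(h, W_U, rho, g) for h in tokens_hiddens]
    return sum(flags) / len(flags)

# Toy usage with invented activations: 4 layers, 2-dim hidden state, 2-word vocab.
W_U = [[1.0, 0.0], [0.0, 1.0]]
easy_token = [[3.0, 0.0]] * 4                 # same prediction at every layer
hard_token = [[3.0, 0.0]] * 3 + [[0.0, 3.0]]  # prediction flips at the last layer
print(deep_thinking_ratio([easy_token, hard_token], W_U))
```

In this toy run the first token settles at layer 1 and the second only at the last layer, so one of the two tokens counts as deep-thinking.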

Think@n: Better Accuracy at 50% of the Cost

The research team used this approach to create Think@n, a new way to scale AI performance at inference time.

Many developers use Self-Consistency (Cons@n), where they sample 48 different answers and use majority voting to choose the best one. This is very expensive because you have to generate every single token for every answer.

Think@n avoids this by using ‘early halting’:

- The model begins to generate multiple candidate responses.

- After just 50 prefix tokens, the system calculates the DTR for each candidate.

- It immediately stops generating ‘unpromising’ candidates with a low DTR.

- It only finishes the candidates with high deep-thinking scores.
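The steps above can be sketched as a small selection loop. The four callables, the `keep_fraction` parameter, and the majority vote over the surviving candidates are assumptions made for illustration; the article does not specify how many candidates are kept or how the final answer is chosen from them.

```python
from collections import Counter

def think_at_n(start_candidate, score_prefix, finish_candidate, extract_answer,
               n=48, prefix_tokens=50, keep_fraction=0.5):
    """Early-halting selection sketch.

    start_candidate(i, k)  -> candidate i generated only up to k prefix tokens
    score_prefix(c)        -> DTR computed on that prefix
    finish_candidate(c)    -> the fully generated candidate
    extract_answer(c)      -> the final answer of a finished candidate
    All four callables are hypothetical hooks supplied by the caller.
    """
    # 1. Start n candidates, each generated only up to the prefix length.
    prefixes = [start_candidate(i, prefix_tokens) for i in range(n)]

    # 2. Rank the prefixes by their deep-thinking ratio, highest first.
    ranked = sorted(prefixes, key=score_prefix, reverse=True)

    # 3. Halt the low-DTR candidates (keep_fraction is an assumed knob).
    n_keep = max(1, int(n * keep_fraction))
    survivors = ranked[:n_keep]

    # 4. Finish only the survivors, then majority-vote (assumed aggregation).
    answers = [extract_answer(finish_candidate(c)) for c in survivors]
    return Counter(answers).most_common(1)[0][0]
```

Only the surviving candidates are generated to completion, which is where the token savings come from: the halted candidates cost just their 50-token prefix.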

Results on AIME 2025

| Method | Accuracy | Avg. cost (k tokens) |
| --- | --- | --- |
| Cons@n (majority vote) | 92.7% | 307.6 |
| Think@n (DTR-based selection) | 94.7% | 155.4 |

On the AIME 2025 math benchmark, Think@n achieved higher accuracy than standard majority voting while reducing inference cost by 49%.
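The reported saving can be checked directly from the two average costs in the results (307.6k tokens for Cons@n, 155.4k tokens for Think@n):

$$\frac{307.6 - 155.4}{307.6} = \frac{152.2}{307.6} \approx 0.495$$

That is just under half of the tokens, in line with the roughly 49% reduction stated above.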

Key Takeaways

- Token count is a poor predictor of accuracy: Raw output length has a moderate negative correlation (r = -0.59) with performance, meaning longer reasoning traces often signal ‘overthinking’ rather than higher quality.

- Deep-thinking tokens define true effort: Unlike simple tokens that stabilize in early layers, deep-thinking tokens are those whose internal predictions undergo significant revision in the deeper model layers before converging.

- The Deep-Thinking Ratio (DTR) is a stronger metric: DTR measures the proportion of deep-thinking tokens in a sequence and shows a strong positive correlation with accuracy (average r = 0.683), consistently outperforming length-based and confidence-based baselines.

- Think@n enables efficient test-time scaling: By prioritizing and finishing only samples with high deep-thinking ratios, the Think@n strategy matches or exceeds the performance of standard majority voting (Cons@n).

- Significant cost reduction through early halting: Because DTR can be estimated from a short prefix of just 50 tokens, unpromising generations can be rejected early, reducing total token cost by about 50%.
