Artificial Intelligence in 2026: What’s New and What’s Next (Trends, Use Cases & How to Prepare)

Artificial Intelligence in 2026: What’s New and What’s Next (Trends, Use Cases & How to Prepare)

·

AI & Future Tech

In 2026, AI shifts from “cool tool” to **system-level infrastructure**—think AI‑native development, multi‑agent workflows, domain‑specific models, **physical AI** in devices/robots, and **security by design**. Below, we break down the trends, real examples, and a 90‑day plan to get your team ready.

Trend #1 — AI goes system‑level: AI‑native development & multi‑agent workflows

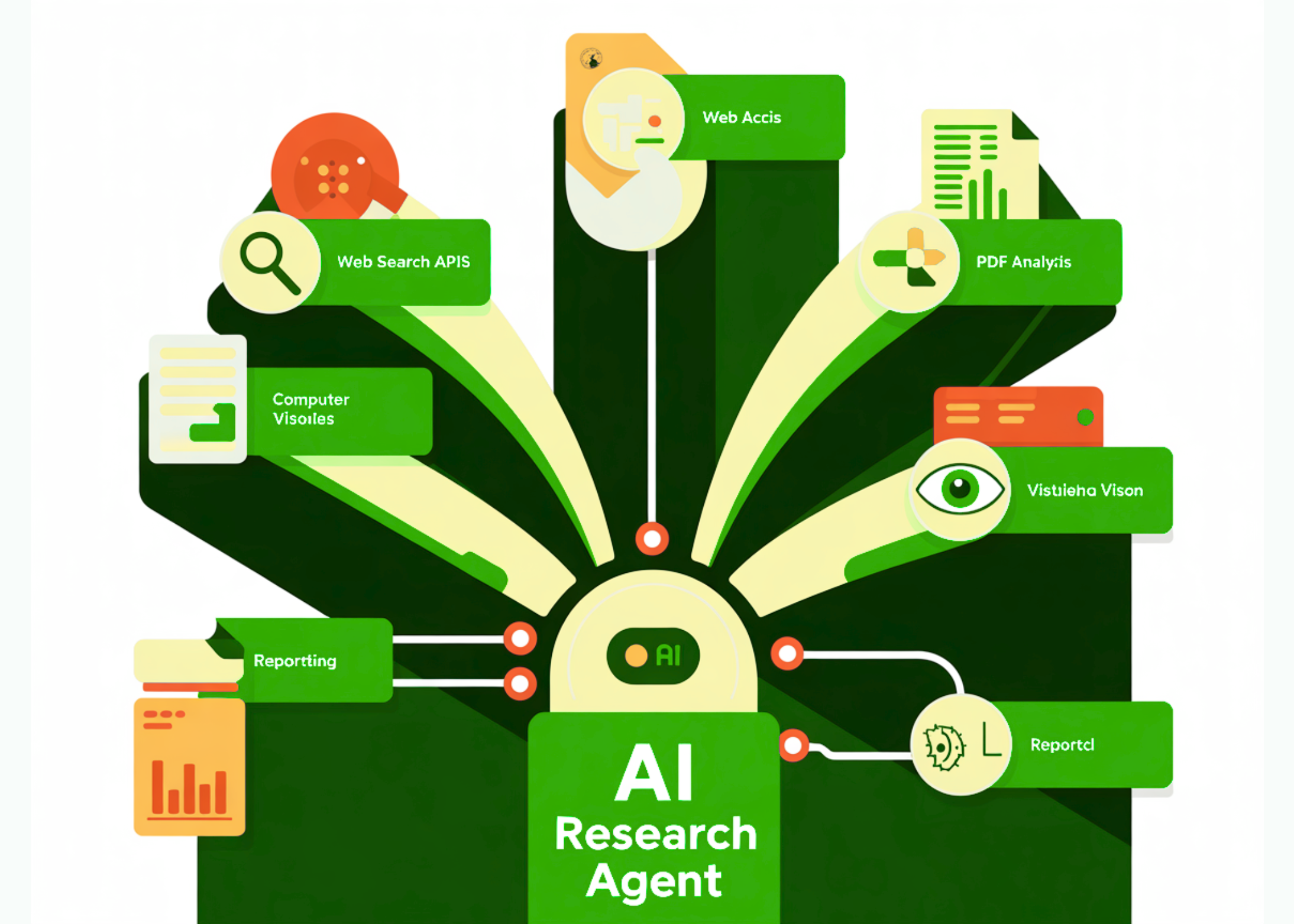

In 2026, AI shifts from isolated copilots to **AI‑native development platforms** and **multi‑agent systems** that plan, coordinate, and execute tasks end‑to‑end. This isn’t just “prompting”—it’s software + data + tools orchestrated by agents, with humans steering outcomes. Major analysts put AI‑native platforms, AI supercomputing, and multi‑agent systems among the year’s top strategic trends.

- AI‑native development platforms help smaller, cross‑functional teams build faster by pairing domain experts with AI, while **AI supercomputing** stacks mix CPUs/GPUs/ASICs to run complex workloads efficiently.

- Multi‑agent systems enable specialized agents to collaborate, handing off subtasks (research → draft → check → deploy) with auditability and human oversight.

Pro tip: Design processes as “agent‑legible” workflows (clear steps, tool scopes, guardrails, and logs). That’s how you get safe speed.

Related: AI guides on TechSparking · How to build your first AI agent (beginner tutorial)

Trend #2 — Domain‑specific models & open ecosystems rise

Expect a surge of **domain‑specific language models (DSLMs)** tuned for finance, healthcare, legal, and ops—often cheaper and more accurate for niche tasks than generic LLMs. Open‑weight ecosystems (including notable Chinese models) are also gaining traction due to customization and cost flexibility for startups and integrators.

Pro tip: For regulated workflows, test a DSLM alongside a general LLM. Measure accuracy, latency, and compliance—then route tasks accordingly.

Trend #3 — Physical AI: devices, robots & smart glasses

“Physical AI” moves intelligence into the real world—robots, drones, and AI‑enhanced devices. Consumer **smart glasses** are poised for a bigger year with better displays, on‑device assistants, and longer battery life, while AI‑ready chips push more reasoning to the edge.

Note: Physical/assistant devices raise privacy and safety expectations—design for consent, logging, and local processing where feasible.

Trend #4 — Security goes proactive: AI security platforms

As AI agents gain permissions, organizations need **AI‑security platforms** to defend against prompt injection, data leakage, and “rogue agents.” Security teams are preparing for both sides of AI: **defense automation** and **AI‑accelerated threats** (from deepfakes to agent‑driven intrusion).

- Give every agent a unique identity, scoped permissions, auditable tool usage, and circuit breakers.

- Adopt preemptive cybersecurity and provenance controls to trace models, data, and outputs.

Trend #5 — Enterprise reality: adoption broadens, scaling still lags

Most companies now use AI in at least one function, but **many are still stuck in pilots**. Leaders who see impact focus on workflow redesign, measurable business cases, and responsible guardrails—turning agents from demos into durable systems.

Pro tip: Tie every AI initiative to a P&L lever (revenue, cost, risk), and redesign the process—not just the interface.

What’s next: 6 practical predictions for 2026

- Agent teammates go mainstream in ops, support, analytics—outcome‑driven, not task‑by‑task.

- Context windows and memory vaults get larger → more bespoke, continuous workflows.

- Edge reasoning grows on AI PCs/phones/glasses for privacy and latency wins.

- GEO/AEO (Generative/Answer Engine Optimization) becomes essential for visibility in AI answers.

- AI security becomes board‑level with dedicated platforms, playbooks, and audits.

- Vertical AI outperforms general models in regulated, high‑stakes workflows.

Your 90‑day action plan

Days 1–30: Foundation & fast wins

- Pick 2–3 processes (support triage, sales research, reporting). Map steps/tools/permissions.

- Pilot a **multi‑agent** workflow with hard guardrails (read‑only first, then scoped writes).

- Stand up **AI security basics**: agent identities, logging, prompt safety tests, data labeling.

- Publish an **AI Policy** page (transparency → trust) and update your **Privacy Policy**.

Days 31–60: Scale what works

- Route tasks by **model fit**: general LLM vs. DSLM (measure accuracy/cost/latency).

- Move to **human‑in‑the‑loop** approvals for higher‑risk actions (emails, file writes, transactions).

- Implement **GEO/AEO**: add FAQ blocks, citations, entity‑rich headings, and JSON‑LD.

Days 61–90: Productionize

- Automate monitoring: drift checks, red‑team prompts, misuse alerts; review logs weekly.

- Expand to an **edge device** use case (mobile/PC) for latency/privacy gains if applicable.

- Quarterly business review: connect agent metrics → revenue/cost/risk KPIs.

Want a custom 30‑day TechSparking editorial & implementation plan? Contact us.

FAQs

- What’s the single biggest AI shift in 2026?

- AI becomes **system‑level**: AI‑native development, multi‑agent orchestration, and physical AI—plus proactive security and governance.

- Should we switch to domain‑specific models?

- Test both. DSLMs can beat general models on accuracy/compliance for niche tasks; keep a general model for open‑ended reasoning.

- How do we appear in AI answers (GEO/AEO)?

- Structure content for machines: clear answers in first 200 words, entity‑rich headings, JSON‑LD, authoritative citations, and FAQ blocks.

- What are the top AI security steps?

- Per‑agent identities and permissions, prompt/response logging, data classification, sandboxed tools, red‑teaming, and kill switches.